Are We Ready for Driverless Cars?

Professor Hemant Bhargava says not yet

(Editor's note: This post by Professor Bhargava originally appeared on Medium on May 5, 2019.)

Are we ready for driverless cars?

Short answer: no.

Tesla, the new kid on the block, has shaken up the automobile industry during the last decade, with beautiful, high-performance electric cars (and a supercharging infrastructure to go with them), often called “computers on wheels” for their boundary-pushing use of computing technology and algorithms. Tesla cars have industry-leading self-driving capabilities, a feature Tesla has erroneously referred to as “autopilot” (see my 2016 article on this).

Meanwhile, a multitude of technology companies, car companies, and startups (e.g., Google’s Waymo, Uber, and GM) have invested billions of dollars and made substantial research progress in fully autonomous driving. These companies have collected huge amounts of driving data, built massive machine learning models, and deployed prototypes and test cars. Still, Tesla may be the company with the biggest achievements and the boldest predictions in this arena.

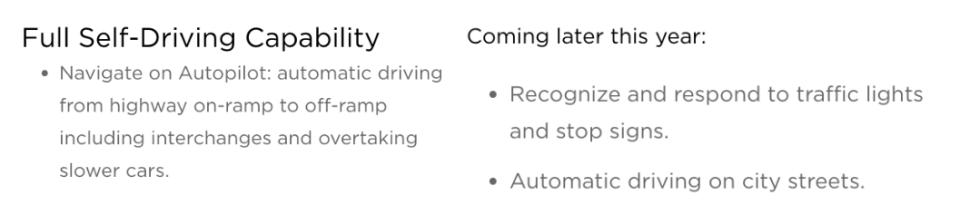

As of now, Tesla has over half a million autopilot-enabled cars, which have logged over 1.36 billion supervised self-driving miles on real roads (data from Lex Fridman’s human-centered artificial intelligence research at MIT). Tesla cars sold today offer a $6,000 upgrade option whose claims can be seen in a screenshot from Tesla’s order placement software.

Tesla’s bold and visionary founder and leader, Elon Musk, announced that “by the middle of 2020, Tesla’s autonomous system will have improved to the point where drivers will not have to pay attention to the road.” Indeed, Musk is placing big financial hopes on creating an uber-Uber based on self-driving technology: “A year from now, we’ll have over a million cars [robotaxis] with full self-driving, software… everything.” (The Verge, April 22, 2019)

But is this really going to happen, and are we ready for it?

I don’t think so, and here are six reasons why.

1. Self-Driving Technology Isn’t Quite That Good Yet

This is an empirical statement, not a claim about the limits of artificial intelligence. As a Tesla driver, I enjoy its self-driving capabilities. Driving can be physically and mentally taxing, so it is a relief when you can unwind for a bit. Indeed, the technological “eyes” can see things I don’t, and the self-driving algorithm has frequently prevented mistakes. However, I also know that I may suddenly have to take back control of the car. I have experienced many situations where the car’s failure to act appropriately and in time rang alarm bells and made me take control pre-emptively.

So the reality is that self-driving lets you be 60-95% relaxed at times, even 100% relaxed on short stretches (roads with excellent infrastructure, no visible potential problems, etc.), but overall it is not a system you can shut your eyes and fully rely on. Don’t let anyone convince you otherwise. (Just read the fine print in Tesla’s self-driving software agreement!) And it’s not getting there soon. What we know is this: Tesla has demonstrated the value of supervised self-driving capability, but the performance of supervised self-driving provides evidence that it is not yet good enough for full self-driving. In fact, what’s wonderful about Tesla’s self-driving feature is how easily, quickly, and intuitively it cedes control back to the driver. This ability to hand control back smoothly is a crucial part of any autopilot toolbox (the Boeing 737 Max tragedies are a vivid and sad illustration of what can happen when humans cannot readily take back control from automation).

2. The Right Benchmark for AI: Average Human, or the Top Performers?

Proponents of full self-driving observe that, even with its imperfections today, a computer can drive better than the average human. I’m willing to accept that claim. But that’s not good enough for me, and I don’t think it is the right expectation for a machine that replaces humans. Humans come in all shapes and sizes; some drivers have better skills and physical capabilities than others. If we’re going to evaluate self-driving technology, the right benchmark is the top 5% of human drivers (I pick 5% rather than 1%, or “the best”, to allow for some variety and subjectivity in defining the “best” drivers).
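To make the benchmark question concrete, here is a minimal Python sketch that uses entirely invented numbers: crash rates (crashes per million miles) for a hypothetical population of twenty drivers, plus a hypothetical crash rate for an automated system. It shows how a system can beat the average human while still falling short of the safest 5%. The function name `top_performer_benchmark` and all of the data are illustrative assumptions, not measurements.

```python
import statistics

# HYPOTHETICAL crash rates (crashes per million miles) for twenty human drivers.
# These numbers are invented for illustration; they are not real data.
HUMAN_CRASH_RATES = [
    0.4, 0.6, 0.8, 1.0, 1.2, 1.5, 1.8, 2.0, 2.5, 3.0,
    0.5, 0.7, 0.9, 1.1, 1.3, 1.6, 1.9, 2.2, 2.8, 4.0,
]

# HYPOTHETICAL crash rate for an automated driving system.
AI_CRASH_RATE = 0.9


def top_performer_benchmark(rates, top_fraction=0.05):
    """Return the crash rate that the safest `top_fraction` of drivers meet or beat.

    Lower crash rates are better, so the safest drivers come first after sorting.
    """
    ordered = sorted(rates)
    k = max(1, int(len(ordered) * top_fraction))  # size of the top group, at least one driver
    return ordered[k - 1]  # the worst rate inside the top group


average_benchmark = statistics.mean(HUMAN_CRASH_RATES)
top5_benchmark = top_performer_benchmark(HUMAN_CRASH_RATES)

print(f"average human rate: {average_benchmark:.2f}")              # 1.59
print(f"top-5% benchmark:   {top5_benchmark:.2f}")                 # 0.40
print(f"AI beats average:   {AI_CRASH_RATE < average_benchmark}")  # True
print(f"AI meets top 5%:    {AI_CRASH_RATE <= top5_benchmark}")    # False
```

With a population this small, "top 5%" is a single driver; a real evaluation would need far more drivers and a proper percentile estimate, but the gap between the two benchmarks is the point.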

In games like chess and Go, AI (artificial intelligence) algorithms have, by dint of brute-force computation and machine learning over lots of data, outperformed the best humans: the world champions. Driving, however, is a different challenge for machines. Algorithms act in the ways they’ve been told to act, but real-world driving can pose thousands of rare, novel circumstances. The quality of a human’s response to a new situation depends on innate senses, fundamental intelligence and reasoning power, common sense, and general knowledge, but also on alertness, physical skills, reflexes, and so on. Because of these latter factors, an AI machine’s response could well exceed the “average human response” despite humans’ fundamental intelligence. However, a smart, alert, and responsive human has the potential to respond correctly even when a machine would fail.

3. Our Civic Infrastructure Isn’t Ready

Among the impediments to fully self-driving software are two factors that have nothing to do with machine learning or artificial intelligence algorithms, but rather are about our civic transportation infrastructure. First, our roads, lane separators, directional barriers, etc. are simply not 100% consistent and standardized in the ways that algorithms would like them to be. Construction zones, debris within the lanes, blinding rain … these are some circumstances where the self-driving technology itself begs off. Second, our navigation guideposts (traffic lights, warning signs, indicators of sharp curves, slick roads etc.) are all designed for human consumption rather than mechanical eyes. For self-driving technology to be effective, the software and hardware technology in the car needs to receive these signals digitally, rapidly, and unambiguously. It should be clear that a civic infrastructure upgrade that would allow for universal fully self-driving is many years, or decades, away.

Hand-in-glove with this civic infrastructure argument is the question of how humans will likely respond to AI-driven driverless cars. Consider a set of downtown streets with intersections and marked crosswalks for pedestrians. Away from these crossings, the understanding is that cars can move safely and pedestrians should not jaywalk. But knowing that a driverless car will slow down or halt for a jaywalker, what if pedestrians start crossing the road anywhere they please, or even just to “irritate” a driverless car? This example is just one of many circumstances in which the nature of interactions between driverless cars and humans needs to be understood. Who can claim that we’ve done sufficient research on this front?

4. We Don’t Have the Right Model for Evaluating Fully Autonomous Driving

The above three sections are my personal opinion and analysis of whether the time is right for fully self-driving cars. But let’s ask a different, more general, question: has anyone proven that they actually work “reasonably well”? The following report, from Tesla in October 2018, exemplifies the perspective of proponents: Tesla reported a rate of “one accident for every 3.34 million miles driven when the autopilot was engaged” which is substantially better than “one auto crash for every 492,000 miles driven in the U.S. without an autonomous assist” (based on data from the National Highway Traffic Safety Administration).

Is this truly the right way to evaluate self-driving technology? Perhaps not, once we note that autonomous mode is engaged only in the most favorable conditions. Moreover, it is plausible that several potential autonomous-mode accidents were averted because the driver or the car disengaged just in time. Lex Fridman’s human-centered artificial intelligence research at MIT reports that only about 10% of all Tesla miles are driven in self-driving mode (a number that matches my own experience). This is what empirical researchers call “selection bias”: one group’s superior performance over a second group arises merely (or partly) because the first group selected better situations to play in. You might counter that the self-driving accident rate is so low (roughly one-seventh of the normal rate) that self-driving would perform about the same as human drivers even after correcting for this selection bias. But is that what we want? A fully self-driving technology that does just as well as average human drivers and, moreover, has no ability to use innate human judgment or inference in unanticipated, unforeseen, or never-experienced situations? Wouldn’t we want a 5x or 10x performance multiplier before we place our trust and our lives in this technology?
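The selection-bias argument can be made concrete with a little arithmetic. The minimal Python sketch below first converts the two headline figures quoted above (3.34 million and 492,000 miles per crash) into a ratio of crash rates. It then uses invented per-condition crash rates and mileage mixes, which are assumptions for illustration only, to show how an aggregate comparison can make autopilot look nearly four times safer even when it is exactly as safe as a human in every individual condition. The helper names (`crashes_per_million`, `blended_rate`, `meets_multiplier`) are hypothetical.

```python
# Headline figures quoted in the text, as "one crash every N miles".
MILES_PER_CRASH_AUTOPILOT = 3_340_000  # Tesla, autopilot engaged
MILES_PER_CRASH_US = 492_000           # U.S. average (NHTSA-based)


def crashes_per_million(miles_per_crash):
    """Convert 'one crash every N miles' into crashes per million miles."""
    return 1_000_000 / miles_per_crash


headline_ratio = (crashes_per_million(MILES_PER_CRASH_AUTOPILOT)
                  / crashes_per_million(MILES_PER_CRASH_US))

# HYPOTHETICAL per-condition crash rates (crashes per million miles).
# Invented for illustration: autopilot and humans are equally safe in each condition.
AUTOPILOT_RATE = {"easy": 0.5, "hard": 4.0}
HUMAN_RATE = {"easy": 0.5, "hard": 4.0}

# HYPOTHETICAL share of miles driven in each condition.
# Autopilot is engaged mostly in easy conditions; humans drive everywhere.
AUTOPILOT_MIX = {"easy": 0.95, "hard": 0.05}
HUMAN_MIX = {"easy": 0.40, "hard": 0.60}


def blended_rate(mix, rates):
    """Overall crash rate: per-condition rates weighted by share of miles."""
    return sum(share * rates[cond] for cond, share in mix.items())


naive_ratio = blended_rate(AUTOPILOT_MIX, AUTOPILOT_RATE) / blended_rate(HUMAN_MIX, HUMAN_RATE)

# Like-for-like comparison: ratio of crash rates within each condition.
per_condition_ratio = {c: AUTOPILOT_RATE[c] / HUMAN_RATE[c] for c in HUMAN_RATE}


def meets_multiplier(ai_rates, human_rates, multiplier):
    """True only if the AI is at least `multiplier` times safer in every condition."""
    return all(human_rates[c] >= multiplier * ai_rates[c] for c in human_rates)


print(f"headline ratio:       {headline_ratio:.3f}")   # 0.147 (about 1/7)
print(f"naive ratio:          {naive_ratio:.2f}")      # 0.26
print(f"per-condition ratios: {per_condition_ratio}")  # {'easy': 1.0, 'hard': 1.0}
print(f"5x safer everywhere:  {meets_multiplier(AUTOPILOT_RATE, HUMAN_RATE, 5)}")  # False
```

In this toy setup, the naive ratio mostly reflects where autopilot was switched on; only the stratified, like-for-like comparison speaks to whether the technology is actually safer, and it does not capture accidents averted by last-moment disengagements.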

5. We Don’t Have the Right Regulations and Ethics

This point is very important, but I will keep it brief because others have made it well (e.g., see this article in Nature). Driverless cars will respond in the ways they’ve been programmed to. What choices will they then make (say, between staying on course and killing three people in their path vs. swerving and killing one person who wasn’t)? When accidents occur, who is to blame: the township with the pothole in the road, the owner of the vehicle, or the software programmer who failed to design the car to handle deep potholes?

6. (AI+Driver) > (AI | Driver)

I have made this point previously: self-driving and AI capabilities should “augment” the human driver, not replace him or her. But the point bears repeating in the context of choosing the right benchmark for evaluating the readiness of fully autonomous driving. Should we compare fully autonomous driving to fully manual driving? Or should we compare it to AI-assisted manual driving, which incorporates numerous features that have proven value but still work under the control of the human driver? This set includes lane change warnings, adaptive speed control, multiple cameras and sensors, and other features that assist, but do not eliminate, the driver. So, for the question “are we ready for fully self-driving cars?”, shouldn’t the test be whether fully autonomous driving performs better than the top AI-assisted human drivers?

These are six good reasons. There are others, e.g., the potential for hacking into driverless cars (and our lack of research, experience, or regulations in this area) and the lack of explainability in machine-learning-based algorithms.

For a little more perspective on this article: I’m a technology enthusiast, a Tesla owner, and generally an Elon Musk fanboy.

[1] An interview with Matt Drange (of “The Information”) inspired this blog.

[2] Tesla robo-taxis? This is a bad business idea. Can you imagine wealthy Tesla owners who put down $100K for a car then handing it over to strangers in exchange for $30 per day?